Overview

This project was a collaborative effort between the UK Open University, Google, and the Institute of Social Dialogue. Its objective was to develop a multi-screen application aimed at promoting social inclusion and addressing issues such as discrimination, bullying, and racism in UK schools. Google.org provided funding, expertise, and support, while the Open University team, consisting of three members, managed the project planning, partnerships, and implementation.

The problem

Many pupils in schools across the UK experience discrimination, bullying, and racism. While teachers are eager to address these issues through discussion and educational materials, younger students often struggle to engage meaningfully in these conversations. This challenge is further compounded by the difficulty teachers face in creating an engaging and constructive environment to explore such sensitive topics in the classroom.

To tackle this, we leveraged virtual reality (VR) technology to create immersive 360-degree videos that simulate real stories of discrimination and bullying, collected from various schools. These VR experiences were made available on multiple screens in schools, allowing children to observe and be fully immersed in these scenarios. As they watch, students can judge the situations and make decisions that influence the storyline. By placing children in the shoes of someone facing discrimination or bullying, the project aimed to raise awareness, spark informal yet engaging discussions, and foster empathy—all achieved through the interactive use of VR in both 3D and 2D modes.

My Role

I was one of the three core team members leading this project. As the only team member with a computer science background, I was the only one able to communicate with the software developers about usability and technical matters. My role covered the following tasks:

- Conducted user research through focus groups and interviews with students, teachers, and government partners to understand the problem and gather requirements.

- Performed requirement analysis, designed questionnaires, and analyzed data to inform solution design.

- Collaboratively designed solutions with stakeholders and partners, including participatory design sessions that added interactive and motivating components to the app, resulting in a 550% increase in new users and a 700% increase in user retention.

- Created wireframes and produced interactive 360 VR experiences to address issues of discrimination, bullying, and racism in schools.

- Managed communication and coordination between stakeholders, including researchers, teachers, children, and software developers, ensuring alignment throughout the project.

- Planned and implemented testing and evaluation processes, including usability testing on mobile and desktop platforms, complemented by Google Analytics and server log data analysis.

- Managed iterative design processes, continuously refining the solution until the final product was achieved.

Methods, data collection, and development

Interviews and Focus Groups

We conducted interviews and focus groups with teachers from selected schools who were eager to participate in the project and had firsthand experience with the challenges outlined in our problem definition. In addition, we engaged with potential partners from various sectors, including government representatives, Scotland Yard police, and other organizations, to share insights and explore ways to broaden the project's impact across different contexts.

We also interviewed children to hear their personal stories related to discrimination, bullying, or racism—experiences that aligned with the issues we aimed to address. These interviews and focus groups were invaluable in deepening our understanding of the problem and provided authentic, real-life examples. These narratives were then incorporated into the 360-degree video storyboards, enhancing the relevance and effectiveness of the immersive experiences.

Understanding the technical context

To ensure the final product was usable within its intended environment, I introduced an additional stage focused on understanding the technical context. This involved conducting surveys with over 120 potential users and stakeholders to gather insights into their behaviors with technology, the available equipment in different schools, and how both teachers and students use these resources. The findings revealed that, in addition to projectors, Windows laptops, and TVs, many schools were equipped with Android-capable Chromebooks. This insight led us to prioritize Chromebooks as a key target device, alongside smartphones, to maximize accessibility and impact.

Production

We transformed the real-life stories collected during the interview and focus group stages into immersive experiences by collaborating with a production partner to create 360-degree videos. These videos were produced with the participation of children from the schools involved in the project, adding authenticity and relevance to the scenarios.

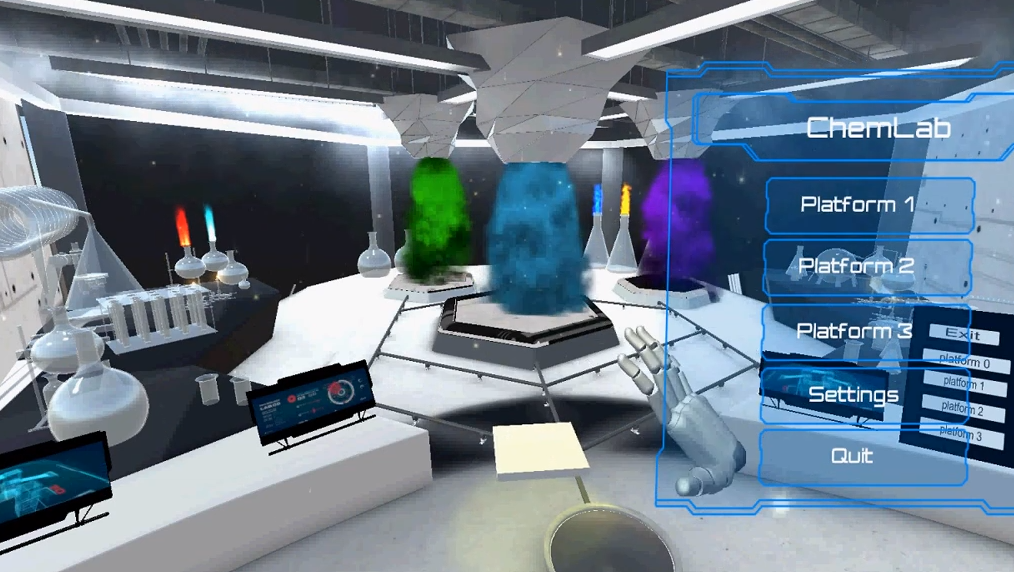

To test and evaluate our approach, we developed a prototype scenario with the help of our software development partners. This scenario was implemented as a mobile application and also made accessible through a WebGL-based web browser interface. Users could engage with the scenarios in either a 3D VR mode or a 2D mode, providing flexible options for different technological environments and user preferences.

Testing and evaluation

Once an early version of the product was completed, I, along with the project manager, traveled to several cities across the UK to visit various schools, test the app, and gather feedback on its usability. This testing phase involved a combination of methods, including direct observation, questionnaires, and focus groups with both students and teachers to gain diverse perspectives on the user experience.

In addition to qualitative feedback, we conducted a quantitative analysis using Google Analytics and in-app data tracking to monitor user journeys. This analysis helped us identify broken scenarios and areas where users were dropping off, revealing challenges in the number of users completing a full story. These insights were critical for refining the app and enhancing user engagement in subsequent iterations.
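The drop-off analysis described above can be sketched as a simple funnel over event records. This is a minimal illustration, not the project's actual pipeline: the step names in `STEPS` and the `(user_id, step_name)` event shape are assumptions, since the source does not describe the tracked events.

```python
from collections import defaultdict

# Hypothetical ordered checkpoints within one story; the real event names
# used by the app are not given in the source.
STEPS = ["story_start", "decision_1", "decision_2", "story_end"]


def funnel(events):
    """Count distinct users reaching each step and the share lost since the previous step.

    `events` is an iterable of (user_id, step_name) pairs, for example
    parsed from an analytics export or from server logs.
    """
    users_by_step = defaultdict(set)
    for user_id, step in events:
        users_by_step[step].add(user_id)  # sets ignore repeated events per user

    rows = []
    previous = None
    for step in STEPS:
        reached = len(users_by_step[step])
        # Drop-off is relative to the previous step; it is left as None for
        # the first step or when nobody reached the previous step.
        drop = 1 - reached / previous if previous else None
        rows.append((step, reached, drop))
        previous = reached
    return rows
```

A step with an unusually high drop-off is a candidate for a broken scenario or a confusing interaction, which can then be checked through observation.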

Testing on different screens and recording usability data

During usability testing, our methods were adapted to accommodate various screen sizes and device types, including smartphones, tablets, Chromebooks, projectors, and PC screens. The different modes of usage—2D versus 3D—also influenced how usability data was collected and analyzed. To address these variations, we developed a comprehensive data collection and analysis framework that could be applied across all devices. This structure allowed for consistent data gathering, while also enabling tailored evaluations to account for the unique interactions and experiences associated with each device type.
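One way to picture a shared structure of this kind is a single event record that every device and mode writes in the same shape. The sketch below is hypothetical: the field names, device labels, and action names are assumptions made for illustration and are not taken from the project's framework.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class UsabilityEvent:
    """One observed interaction, recorded identically on every device (assumed schema)."""
    session_id: str
    device: str         # e.g. "smartphone", "tablet", "chromebook", "projector", "pc"
    mode: str           # "2D" or "3D"
    action: str         # e.g. "rewind", "exit", "look_around"
    timestamp_s: float  # seconds since the scenario started


def count_actions(events):
    """Tally actions per (device, mode, action) so devices can be compared directly."""
    return Counter((e.device, e.mode, e.action) for e in events)
```

Keeping the record identical across devices allows consistent aggregation, while device-specific evaluation can still filter on the `device` and `mode` fields.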

Choosing the right questions to ask

I collaborated with the programming team to implement various methods for collecting usability data directly within the app. This process began with clearly defining the key questions we wanted to answer and understanding the reasons behind them. With these objectives in mind, we brainstormed effective strategies for gathering the necessary data while navigating several technical constraints. This careful planning ensured that the data collected was both relevant and actionable, providing valuable insights to inform future iterations of the app.

Usability testing (examples from findings)

Our analysis revealed that the product is highly engaging for the majority of pupils. Here are the key usability findings and the subsequent improvements we implemented:

1. VR Usability:

Users had limited control during scenarios due to the absence of buttons. We addressed this by adding more interactive controls.

2. Dimensional Feedback:

To help users better understand their surroundings, we added a compass at the top of the video.

3. Rewind Functionality:

Observations showed that children needed to rewind scenarios but struggled to do so. We added a rewind feature to address this.

4. Subtitles for 2D Mode:

For group watching in 2D mode, where sound could be unclear, we added subtitles.

5. 2D Mode Navigation:

Students had difficulty navigating in different directions, expecting the scene camera to move with their fingers, similar to Google Street View. We reversed the camera movement direction to align with their expectations.

6. Interactive Storylines:

Users expressed a desire for more control over the storyline. Drawing on my PhD research in engagement and motivation psychology, we incorporated elements of self-determination theory to make scenarios more interactive, providing positive or neutral feedback and branching storylines based on user decisions.

7. User Behavior Insights:

Usability data showed that the initial phase of usage was exploratory, with users trying out different controls. As they became more familiar, they focused more on the story and moved the screen less. To prevent accidental exits, we increased the time required to trigger exit buttons and relocated them to the top of the screen, making them accessible only when intentionally sought.

These improvements have significantly enhanced the user experience, making the product more intuitive and engaging for pupils.
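The accidental-exit fix in finding 7 amounts to a dwell timer: the exit control fires only after the user has rested on it for a set duration. The sketch below assumes a per-frame update loop and a hover or gaze signal; the class name, the `dwell_s` parameter, and the update interface are hypothetical and not taken from the app's code.

```python
class DwellButton:
    """Fires only after the pointer or gaze has rested on it for `dwell_s` seconds."""

    def __init__(self, dwell_s):
        self.dwell_s = dwell_s
        self._entered_at = None  # time the current hover started, if any

    def update(self, is_hovered, now_s):
        """Call once per frame; returns True on the frame the button triggers."""
        if not is_hovered:
            self._entered_at = None  # looking away resets the timer
            return False
        if self._entered_at is None:
            self._entered_at = now_s
        if now_s - self._entered_at >= self.dwell_s:
            self._entered_at = None  # re-arm so it cannot fire on every frame
            return True
        return False
```

Raising `dwell_s` makes a brief pass over the button harmless, which matches the observation that users move the view a lot during the exploratory phase.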

Questionnaires

We also employed questionnaires to gather feedback from both children and teachers about their personal experiences with the product, as well as their observations of others using it. The results from these questionnaires, along with other data collection methods, are depicted in the diagrams at the end of this article.

Reflect and improve (Participatory Design)

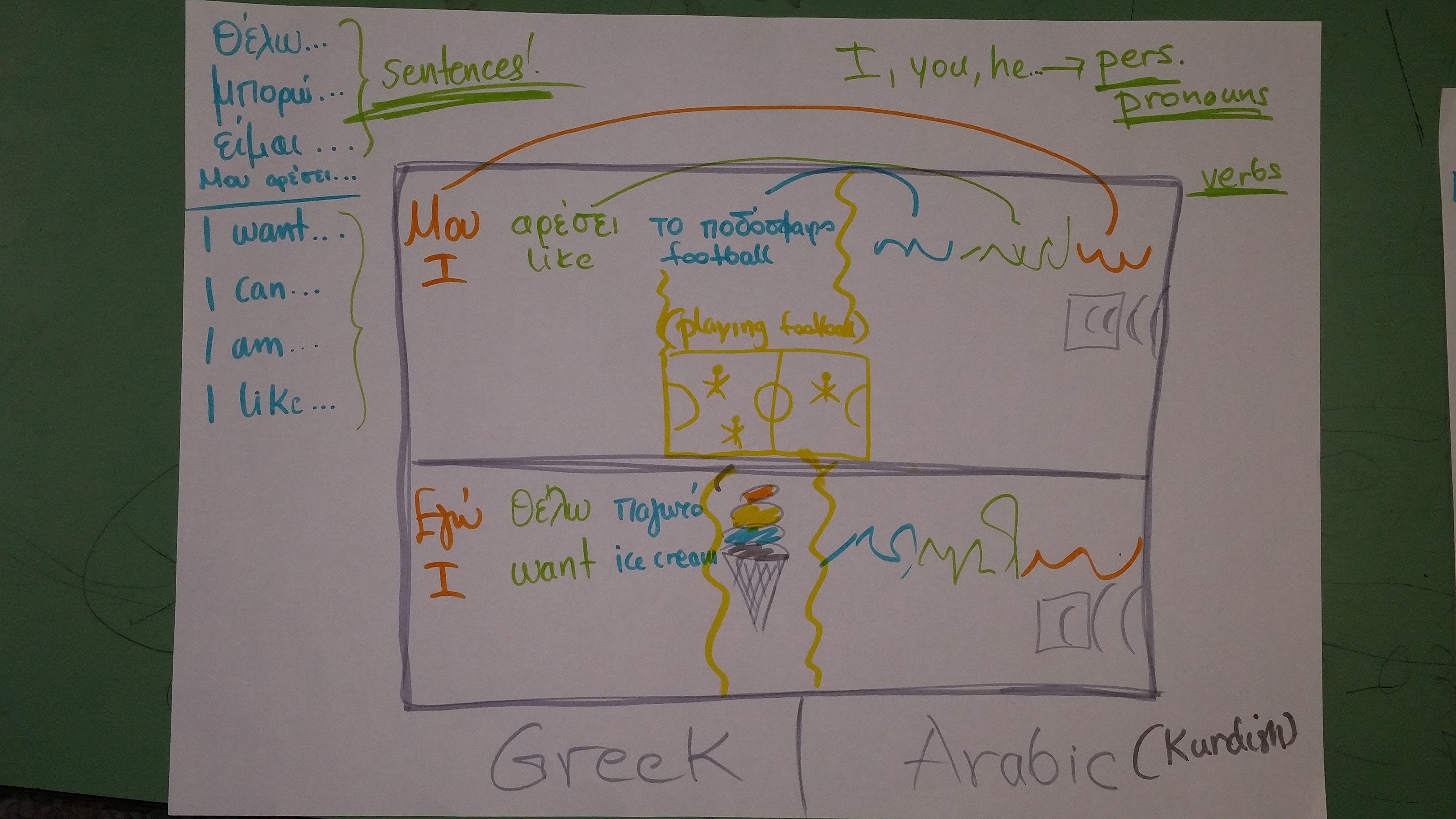

After collecting evaluation data from the first scenario, we initiated co-design sessions with some users to identify tools that could enhance interaction. The feedback highlighted the need for greater autonomy, more control over the scenario, and increased decision-making opportunities. These insights were corroborated by analytics data, which showed that many users did not complete the videos.

Based on these findings, we hypothesised that increasing interactivity and incorporating gamification elements would boost user engagement. This hypothesis was then tested in a design workshop. During the participatory design workshops, users proposed several ideas, many of which aligned with our design hypothesis and focused on gamification and interaction.

As a result, the videos were digitally reproduced to include several pauses and decision-making points throughout the story. Each decision affects the storyline, which makes the experience more engaging, while reworking the existing footage digitally kept production cost-effective.
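A branching story of this kind can be modelled as a small graph in which each node is a video segment and each decision points to the next segment. The following is a minimal sketch under that assumption; the segment names, clip file names, and decision labels are invented placeholders, not the project's actual scenarios.

```python
# Hypothetical story graph: each node is a video segment, and `choices` maps
# a decision label to the next segment. An empty dict marks an ending.
STORY = {
    "intro": {
        "clip": "intro.mp4",
        "choices": {"speak_up": "support", "stay_silent": "bystander"},
    },
    "bystander": {
        "clip": "bystander.mp4",
        "choices": {"tell_teacher": "support"},
    },
    "support": {"clip": "support.mp4", "choices": {}},
}


def play(story, start, decisions):
    """Return the clips shown, in order, for a sequence of decisions."""
    node = start
    clips = [story[node]["clip"]]
    for decision in decisions:
        choices = story[node]["choices"]
        if decision not in choices:
            raise ValueError(f"{decision!r} is not available at {node!r}")
        node = choices[decision]  # follow the branch chosen at the pause point
        clips.append(story[node]["clip"])
    return clips
```

Because several branches can lead to the same segment, existing footage can be reused across paths rather than filmed once per path.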

Release

After several iterations of the design process, we released the final version of the app on the Google Play Store, the iOS App Store, and the Open University's online platform (OpenLearn).

Challenges

Requirement gathering:

Initially, the project requirements were straightforward. However, after completing the first version of the product, capturing requirements became more challenging, especially from participants with limited technology skills who struggled to articulate their needs. Co-design workshops proved invaluable, allowing users to express their needs practically. Observations also helped capture unspoken requirements. Ice-breaking activities and relationship-building exercises further encouraged teachers and children to voice their ideas confidently, even if they seemed naïve.

Technical constraints:

We encountered several technical constraints related to device compatibility and the rendering of 360-degree videos. To address these, we developed a WebGL alternative, making the scenarios accessible on various screens. Additionally, we optimised the application for better performance on low-spec devices.

Many teachers and children lacked the technical skills to operate the provided VR headsets, mobile phones, and chargers. To mitigate this, I designed a Digital Toolkit that detailed the project aims, usage instructions, and required technical knowledge. This toolkit, published alongside the product, became a valuable reference for teachers who later contributed to its improvement.

The user experience varied across different smartphone models, tablets, and screen sizes. Collecting data from multiple devices was time-consuming but essential for usability testing. Integrating usability data collection with the application code posed significant challenges. We prioritised the most critical data and sought the simplest collection methods. When integration was not feasible, we relied on alternative methods such as observations, interviews, and questionnaires.

Time:

Managing time was particularly challenging due to tight deadlines and a limited budget. This was evident during the testing phase of the first scenario, while the production company simultaneously began working on the remaining scenarios. We adopted an agile approach, creating the first scenario with minimal functionality to gather feedback quickly, which informed the production of subsequent scenarios.

Time constraints prevented us from implementing all the identified needs from usability testing and co-design workshops, such as adding subtitles to the scenarios. In hindsight, early usability testing with any available videos or similar apps would have been beneficial, but various factors made this impractical at the time.

Additional Links

BBC Report featuring the project

Digital Toolkit PDF, authored by George Alain

OpenLearn website (WebGL, Android, and iOS links)